Assessment 2: NLP303 Natural Language Processing and Speech Recognition

Assessment Task

Design an NLP or Speech Recognition system using transformer architecture. Discuss your area of interest further and investigate a relevant model from research papers to verify the implementation plan for a rapid proof-of-concept prototype. Please refer to the Task Instructions for details on how to complete this task.

Context

This assessment recognises the tectonic shift taking place in how NLP projects are approached in the 2020s. According to Sebastian Ruder, a globally recognised thought leader and research scientist in NLP at Google DeepMind, "It only seems to be a question of time until pretrained word embeddings will be dethroned and replaced by pretrained language models in the toolbox of every NLP practitioner" (Ruder, 2018). Given the extensive demonstrations and use of transformer technology by OpenAI, Google, Facebook, Microsoft and Baidu, there is little reason not to consider this technology for any reasonably sized (i.e., beyond toy) NLP application or research project.

Understanding transformer technology and implementations is essential for anyone who works in the NLP field. Therefore, this transformer-centric assessment is essential to undertake in order to:

1. provide context for keeping up with the continual news updates on transformers, and

2. enter the workplace in any role associated with NLP, if only to compare and contrast the new methods of conducting NLP tasks with the traditional, fragile, rules-based approach.

Completing this assessment will provide the following:

a) Hands-on experience and understanding regarding how pre-trained transformer models can fit into NLP projects

b) An understanding of the models available through the Hugging Face Hub

c) The skills to acquire a pre-trained transformer model with which to execute typical NLP tasks.

The skills developed include using open-source transformers and self-directing your learning towards practice with limited instruction. These two skills alone will enable you to undertake innovative proofs of concept or a minimum viable product with limited funding, while benefiting from the technology investments of tech giants.

The following concise background on transformer technology and the importance of the open-source Hugging Face Transformers library provides sufficient context, including the scale and scope of these models and the vocabulary required, to undertake this project before commencing projects in a learner or early professional role.

Social media (including Clubhouse, Twitter Spaces and TikTok) and online collaboration tools (such as Slack, Google Docs, Microsoft Teams and Zoom) push the boundaries of natural language to include new forms of expression, such as emoticons, slang (see urbandictionary.com), and deliberately misspelt and abbreviated words, shared among large groups of people around the world. To cope with both existing natural languages and these new formats, state-of-the-art natural language processing tools build on the neural network architecture of the Transformer (Vaswani et al., 2017). Two fundamental ideas explain why Transformer models have become the de facto models for NLP. The first is self-attention, which captures dependencies between the elements of a sequence. The second, and arguably the most important approach in NLP today, is transfer learning. This paradigm first pre-trains a model on large unlabelled text corpora in an unsupervised manner; a subsequent stage then fine-tunes the model on a smaller task-specific dataset. Further evidence of the superiority of Transformers over the earlier recurrent and convolutional neural network architectures is available from the leaderboards of NLP benchmarks (https://gluebenchmark.com/leaderboard) and from sites tracking progress in NLP (http://nlpprogress.com/).
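To make self-attention concrete, here is a minimal single-head sketch of scaled dot-product attention as defined by Vaswani et al. (2017), softmax(QKᵀ/√d_k)V, written in plain Python so it runs without any deep learning library. The toy embeddings and identity projection matrices are illustrative assumptions; real models learn these projections, use many attention heads and operate on tensors in PyTorch or TensorFlow.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a sequence of n token vectors (n x d_model); w_q, w_k and w_v are
    projection matrices (d_model x d_k). Returns the n output vectors and the
    n x n attention weights, where row i shows how strongly token i attends
    to every token in the sequence.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    weights = []
    for q_i in q:
        # Dot product of query i with every key, scaled by sqrt(d_k)
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        weights.append(softmax(scores))
    # Each output vector is the attention-weighted sum of the value vectors
    return matmul(weights, v), weights


# Three toy 2-dimensional token embeddings and identity projections (illustrative only)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of the attention matrix sums to 1. With these toy inputs, the first token attends equally to itself and to the third token, because their keys have the same dot product with its query, and less to the second token, whose vector is orthogonal to it.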

The NLP machine learning model pipeline of the most popular open-source Transformers library (Wolf et al., 2019) generally follows a standard workflow.
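This workflow is commonly described as three stages: a tokenizer converts raw text into token ids, the model maps those ids to output scores (logits), and a post-processing step turns the logits into predictions. The sketch below mimics those stages for sentiment classification in plain Python so it runs offline. The vocabulary, label names and per-token weights are hypothetical, and `toy_model` is only a stand-in for a pre-trained transformer, not a real one.

```python
import math

# Hypothetical toy vocabulary and labels; a real checkpoint ships its own
VOCAB = {"[UNK]": 0, "great": 1, "love": 2, "terrible": 3, "boring": 4}
ID2LABEL = {0: "NEGATIVE", 1: "POSITIVE"}
# Hypothetical per-token (negative, positive) contributions, made up for illustration
TOKEN_WEIGHTS = {1: (-1.0, 2.0), 2: (-1.0, 2.0), 3: (2.0, -1.0), 4: (1.5, -1.0)}


def tokenize(text):
    """Stage 1: map raw text to token ids (lower-case whitespace split; unknown words -> [UNK])."""
    return [VOCAB.get(word, VOCAB["[UNK]"]) for word in text.lower().split()]


def toy_model(token_ids):
    """Stage 2: hypothetical stand-in for a pre-trained transformer, returning one logit per label."""
    logits = [0.0, 0.0]
    for tid in token_ids:
        neg, pos = TOKEN_WEIGHTS.get(tid, (0.0, 0.0))
        logits[0] += neg
        logits[1] += pos
    return logits


def postprocess(logits):
    """Stage 3: softmax the logits and map the best index to a readable label."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=lambda i: probs[i])
    return {"label": ID2LABEL[best], "score": probs[best]}


def classify(text):
    """Run the three stages end to end."""
    return postprocess(toy_model(tokenize(text)))


print(classify("I love this great course"))
print(classify("boring and terrible"))
```

In practice, the library's `pipeline` function bundles a tokenizer, a pre-trained model and a post-processing step behind a single call, which is why a few lines of Python are often enough for a prototype.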

The library supports high-level NLP tasks such as text classification, named-entity recognition, machine translation, summarisation, question answering and much more. Additionally, Transformers go beyond these analysis tasks, offering capabilities such as text generation for autocompleting stories (https://transformer.huggingface.co/), as seen with the highly publicised GPT (Generative Pre-trained Transformer) models: GPT, GPT-2 and GPT-3.

To understand the variety of transformers beyond the GPT models, consider other popular open-source transformer models, including Google's BERT (Bidirectional Encoder Representations from Transformers), Facebook's BART, RoBERTa (Robustly Optimised BERT Pre-training Approach) and T5 (Text-to-Text Transfer Transformer). Transformer models can have very large numbers of neural network parameters, e.g., ALBERT (18 million), BERT (340 million), the open-source GPT-Neo (2.7 billion), GPT-3 (175 billion) and Switch Transformer, which scales to 1.6 trillion (Fedus, Zoph & Shazeer, 2021, p. 17). However, this still falls short of the roughly 1,000 trillion (10^15) synapses in the human brain (Zhang, 2019), which are loosely regarded as the biological equivalent of neural network parameters (Dickson, 2020).
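These parameter counts translate directly into memory requirements, which matter when planning a prototype on a single GPU. The arithmetic below assumes 4 bytes per parameter in 32-bit floating point (fp32) and 2 bytes in 16-bit floating point (fp16), and counts only the weights; activations, optimiser state for fine-tuning and framework overhead all add to this.

```python
# Parameter counts as quoted in the text
PARAMS = {
    "ALBERT": 18e6,
    "BERT": 340e6,
    "GPT-Neo": 2.7e9,
    "GPT-3": 175e9,
    "Switch": 1.6e12,
}
HUMAN_SYNAPSES = 1e15  # approximate figure cited from Zhang (2019)
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weights_gb(n_params, precision="fp32"):
    """Memory to store the weights alone, in gigabytes (1 GB = 10**9 bytes).

    Excludes activations, optimiser state and framework overhead.
    """
    return n_params * BYTES_PER_PARAM[precision] / 1e9


for name, n in PARAMS.items():
    print(f"{name:<8} fp32: {weights_gb(n):>9.2f} GB   fp16: {weights_gb(n, 'fp16'):>9.2f} GB")

print(f"Synapses per Switch parameter: {HUMAN_SYNAPSES / PARAMS['Switch']:.0f}")
```

For example, GPT-Neo's 2.7 billion parameters need about 10.8 GB in fp32 for the weights alone, so a GPU with, say, 16 GB of memory leaves little headroom for fine-tuning, whereas GPT-3's 175 billion parameters (about 350 GB in fp16) are far beyond a single GPU. The last line shows that 10^15 synapses is roughly 625 times the 1.6 trillion parameters of Switch Transformer.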

Instructions

Groups are strongly encouraged to build the rapid proof-of-concept prototype using Python, Google Colab with GPU, Hugging Face Transformers and, if required, the PyTorch or TensorFlow library. Any existing code you leverage may well dictate your choice of deep learning library, unless you start from scratch (not recommended). However, your first assessment showed what is achievable with Hugging Face in just a few lines of Python code without recourse to any additional deep learning library. Your NLP or speech recognition system should be built predominantly around the Transformer architecture (Hooke, 2020) or one of its variants (Lin et al., 2021).

The project proposal for your submission will be approximately 1000 words long. As a minimum, your proposal structure should include the following sections and considerations:

1. Abstract of around 150 words or less.

2. The idea that emerges from the area or problem of interest motivating the group to develop an NLP/Speech Recognition system.

3. NLP/Speech recognition transformer-based architecture, e.g., encoder-decoder attention.

4. Background literature encompassing your approach and algorithms/source code available.

5. How does your group intend to add value to the code and data already available?

6. Datasets used to develop the system, with a train/dev/test split (if datasets are required; otherwise, the pre-trained transformer to be used).

7. Qualitative and quantitative evaluation metrics, e.g., outputs, ROUGE or attention plots.

8. Project plan inclusive of work breakdown, key milestones and responsibilities.
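Items 6 and 7 can be prototyped in a few lines. The sketch below shows a reproducible train/dev/test split (fixed random seed, 80/10/10 by default, which is an assumption rather than a requirement) and ROUGE-1, which scores a generated summary by its clipped unigram overlap with a reference summary. It is a simplified illustration using whitespace tokenisation only; for reported results, a maintained implementation such as Google's `rouge-score` package is preferable.

```python
import random
from collections import Counter


def train_dev_test_split(examples, dev_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle a copy of the examples reproducibly and cut it into train/dev/test lists."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed -> same split on every run
    n_test = int(len(items) * test_frac)
    n_dev = int(len(items) * dev_frac)
    test = items[:n_test]
    dev = items[n_test:n_test + n_dev]
    train = items[n_test + n_dev:]
    return train, dev, test


def rouge_1(candidate, reference):
    """ROUGE-1 precision, recall and F1 from clipped unigram overlap.

    Uses lower-cased whitespace tokens only; published ROUGE scorers add
    their own tokenisation and optional stemming, so scores can differ.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter & Counter keeps min(count in candidate, count in reference) per word
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


train, dev, test = train_dev_test_split(range(100))
print(len(train), len(dev), len(test))
print(rouge_1("the cat sat on the mat", "the cat lay on the mat"))
```

Here the candidate and reference share five of their six words ("the" twice, "cat", "on" and "mat"), so precision, recall and F1 are all 5/6, about 0.833.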

Useful Resources for Brainstorming your Project

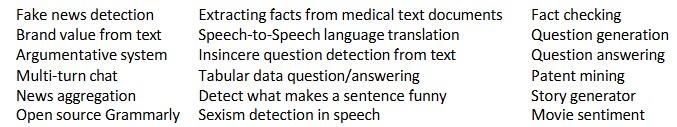

Example systems or tasks using NLP techniques, to inspire your group's journey into extracting insights from text, include:

While this list is not intended to be exhaustive, you can find plenty of examples by conducting a formal search in Web of Science, Scopus, Google Scholar or YouTube, using search terms such as interesting, innovative or amazing + examples + NLP systems or applications. The most contemporary resources, published within the last 3-5 years, are likely to be built on the Transformer architecture.

Some NLP research journals, methods, models, applications and datasets which may be useful include:

- Association for Computational Linguistics Anthology (https://www.aclweb.org/anthology/)

- arXiv open-access archive (https://arxiv.org/)

- Github NLP datasets (https://github.com/niderhoff/nlp-datasets)

- HuggingFace Model hub (https://huggingface.co/models)

- HuggingFace Datasets Hub (https://huggingface.co/datasets)

- NLP-progress (https://nlpprogress.com) repository to track the progress in NLP tasks

- Papers With Code (https://paperswithcode.com/methods/area/natural-language-processing)

- Zsolnai-Fehér, K. Two Minute Papers (https://users.cg.tuwien.ac.at/zsolnai/).

- Wikipedia text data (https://en.wikipedia.org/wiki/List_of_datasets_for_machine-learning_research#Text_data).

Owing to the rapidly changing nature of the NLP field and continual enhancements to models, the cycle from research publication to implementation of new Transformer models has shortened considerably. In light of this, no textbook is prescribed; instead, consult the online documentation for the latest release of Transformers (https://huggingface.co/transformers/index.html). Generally, each Transformer model is associated with a research paper of some description.

Other places where you can find online assistance and feedback on Transformers include stackoverflow.com, reddit.com (e.g., r/deeplearning), quora.com, kdnuggets.com and https://discuss.huggingface.co. For some communities, you might have to specify the search as "Transformers NLP" or "Hugging Face Transformers"; otherwise, you will end up wading through content about the human-like robots of the Transformers films and TV series. If you have issues outside your control with any existing notebooks or your own, first check https://github.com/huggingface/notebooks/issues.

Referencing

It is essential that you use appropriate APA style for citing and referencing research, existing code and any other resources, including datasets. For more information on referencing, see https://library.torrens.edu.au/academicskills/apa/tool

Group Submission Instructions

Each team member should individually upload a group proposal.

You are expected to submit one proposal file each. On the top sheet of your proposal, provide a working project title and a list of all team members. Name the file containing the top sheet and proposal as follows, using your own name as an individual team member:

NLP303LastNameFirstNameAssessment2.pdf

Submit the file via the Assessment link in the main navigation menu in NLP303 Natural Language Processing and Speech Recognition. The Learning Facilitator will provide feedback via the Grade Centre in the LMS portal. Feedback can be viewed in My Grades.

IMPORTANT NOTE: If your submission incorporates code, also submit a text file containing the code of your programs.

Before you submit your assessment, please ensure you have read and understand the conditions outlined in the Academic Integrity Code Handbook. If you are unsure about anything in the Handbook, please reach out to your Learning Facilitator.
